Automated Hatch Panel Placement

Inspiration

This was done for the 2019 FRC game Deep Space, where part of the game involved placing circular acrylic panels called "hatch panels" onto a field element called the "cargo ship" to patch the holes in the ship. We knew from the beginning that we wanted to automate lining up with the cargo ship and placing the hatch panel, as the tolerances were very tight (you basically had to place the panels straight on).

How it Works

The robot uses a Limelight to find the cargo ship and determine both how far away we are from it and our orientation relative to it. A Limelight is an all-in-one computer vision platform that runs the computer vision pipelines we used. A pipeline is the piece of software that takes the raw images from the camera and processes them to extract information about the target.
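To make the distance part concrete, here is a minimal sketch of one common way to estimate distance from a camera mounted at a fixed angle, using the vertical angle to the target. This is not necessarily the method used on the robot. The function name, parameter names, and the example numbers are all assumptions made for illustration.

```python
import math


def distance_to_target(target_height_m, camera_height_m, camera_pitch_deg, target_angle_deg):
    """Estimate horizontal distance to a target from the vertical angle to it.

    All parameters are hypothetical names for this sketch:
      target_height_m   height of the vision target above the floor
      camera_height_m   height of the camera lens above the floor
      camera_pitch_deg  upward tilt of the camera from horizontal
      target_angle_deg  vertical angle from the camera's centre to the target
    """
    # Total angle between the horizontal and the line of sight to the target.
    angle = math.radians(camera_pitch_deg + target_angle_deg)
    # Right triangle: the height difference is the opposite side,
    # the horizontal distance is the adjacent side.
    return (target_height_m - camera_height_m) / math.tan(angle)


# Made-up example: target 0.5 m above the camera, seen 45 degrees above horizontal.
estimate = distance_to_target(1.0, 0.5, 0.0, 45.0)
```

This only works when the target and the camera are at different heights, since the estimate depends on the height difference.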

Once we know how far away we are from the target (the spot to place the hatch panel on the cargo ship) and our orientation with respect to it, we can use our motion profiling software to define a path for the robot to autonomously follow to the target. Motion profiling is a controls algorithm that defines the movement of the robot as a series of steps, where each step defines the velocity, acceleration, and orientation of the robot at a certain time point. These steps are combined to make the path which our robot then follows.
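As a rough illustration of what such a series of steps looks like, the sketch below builds a trapezoidal motion profile for a straight-line move: accelerate, cruise, then decelerate to a stop. It is a simplified stand-in rather than the software we ran, it ignores orientation, and the names `Step` and `trapezoidal_profile`, the limits, and the 20 ms time step are all assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Step:
    t: float             # seconds since the start of the path
    position: float      # metres travelled along the path
    velocity: float      # metres per second
    acceleration: float  # metres per second squared


def trapezoidal_profile(distance, max_vel, max_acc, dt=0.02):
    """Return a list of Steps covering `distance` metres in a straight line."""
    # On a short path the robot cannot reach max_vel before it has to brake,
    # so the peak velocity is capped (the trapezoid becomes a triangle).
    cruise_vel = min(max_vel, math.sqrt(distance * max_acc))
    t_acc = cruise_vel / max_acc                 # time spent accelerating
    d_acc = 0.5 * max_acc * t_acc ** 2           # distance covered while accelerating
    t_cruise = max(0.0, (distance - 2 * d_acc) / cruise_vel)
    total = 2 * t_acc + t_cruise                 # braking mirrors accelerating

    steps = []
    for i in range(math.ceil(total / dt) + 1):
        t = min(i * dt, total)                   # clamp the final step to the end
        if t < t_acc:                            # speeding up
            a, v, p = max_acc, max_acc * t, 0.5 * max_acc * t * t
        elif t < t_acc + t_cruise:               # holding cruise velocity
            a, v, p = 0.0, cruise_vel, d_acc + cruise_vel * (t - t_acc)
        else:                                    # slowing down to a stop
            remaining = total - t
            a, v = -max_acc, max_acc * remaining
            p = distance - 0.5 * max_acc * remaining ** 2
        steps.append(Step(t, p, v, a))
    return steps


# Made-up example: a 2 m move limited to 1 m/s and 1 m/s^2.
profile = trapezoidal_profile(2.0, 1.0, 1.0)
```

The important property is that the velocity ramps down to zero at the end of the path, which is what lets the robot arrive at the target gently.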

As we approach the target, we automatically extend our hatch placing mechanism and retract it upon contact, securing the hatch panel in place. Not only was this very reliable, it was also very useful, as it allowed us to score game pieces, and thus points, much faster than our opponents.

Challenges

An interesting challenge we had was that if we told the robot to automatically place the hatch panel while it was moving, it would go towards the cargo ship at an angle, which meant the hatch panel would end up getting stuck in the cargo ship. It was also going rather fast towards the end of the path (when it should be slowing down to place the hatch panel), causing it to crash into the cargo ship.

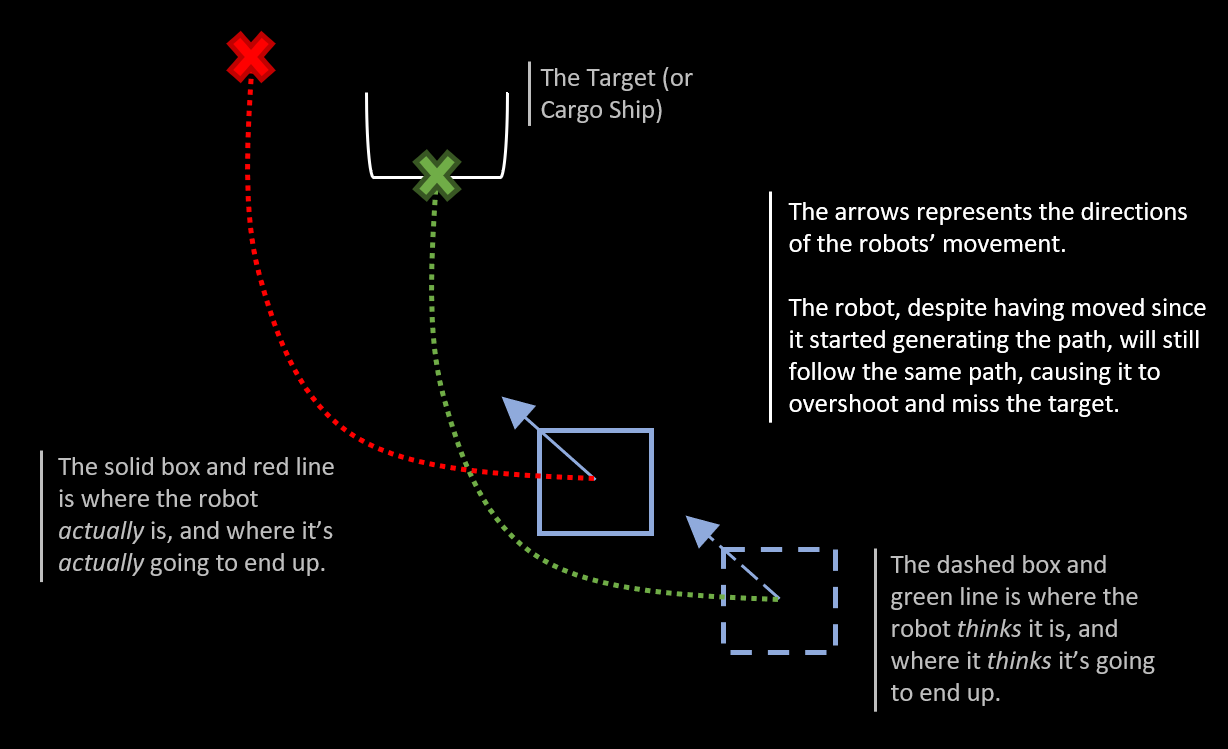

The issue arose because the robot was already in motion when it started to vision target and calculate a path. In the time it took to obtain a path to follow, the robot had moved a little bit, essentially offsetting the path that was generated, as shown in the image above.

This is simply a lack of latency compensation, where the latency is the time it takes to find the target and calculate a path to it. To fix this, the robot would detect when it was within 2 feet of the cargo ship and then manually shorten the path, eliminating the offset and avoiding a crash into the cargo ship. This was one of the key things I worked on, and it ended up working quite well in competition.
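The arithmetic behind the offset, and the general shape of the fix, can be sketched as follows. This is an illustration under assumptions, not the code that ran on the robot: the function names, the idea of comparing the planned remaining distance against a freshly measured one, and the example speed and latency are all hypothetical.

```python
FEET_TO_METRES = 0.3048
SHORTEN_WITHIN_M = 2 * FEET_TO_METRES  # the 2-foot threshold described above


def distance_travelled_during_latency(speed_mps, latency_s):
    """How far the robot moves while the target is found and the path is computed."""
    return speed_mps * latency_s


def corrected_remaining_distance(planned_remaining_m, measured_distance_m):
    """Shorten the remaining path once the robot is close to the target.

    planned_remaining_m  distance the (stale) path still expects to cover
    measured_distance_m  hypothetical fresh measurement of distance to the target
    """
    if measured_distance_m <= SHORTEN_WITHIN_M:
        # Never drive further than the target actually is.
        return min(planned_remaining_m, measured_distance_m)
    # Far from the target the offset does no harm yet, so keep the plan.
    return planned_remaining_m


# Made-up example: at 1.5 m/s with 0.1 s of latency, the path ends up
# roughly 0.15 m longer than it should be.
offset = distance_travelled_during_latency(1.5, 0.1)
```

Even a small latency matters here because the offset lands at the very end of the path, exactly where the robot is supposed to be stopping.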

What I Learned

Fixing the latency compensation issue was a valuable learning experience for me. Although I had heard of latency compensation, I had never seen it myself, let alone had to fix it. Learning about this issue and the approaches to solving it was very interesting.

Additionally, I got to learn a lot about computer vision that year. Originally, I tried using a Limelight and writing our own code to perform Pose Estimation on the target. However, although the CV part worked quite well, other components didn't quite work, forcing us to drop Pose Estimation and come up with another solution.