Sky-High Memes

Inspiration

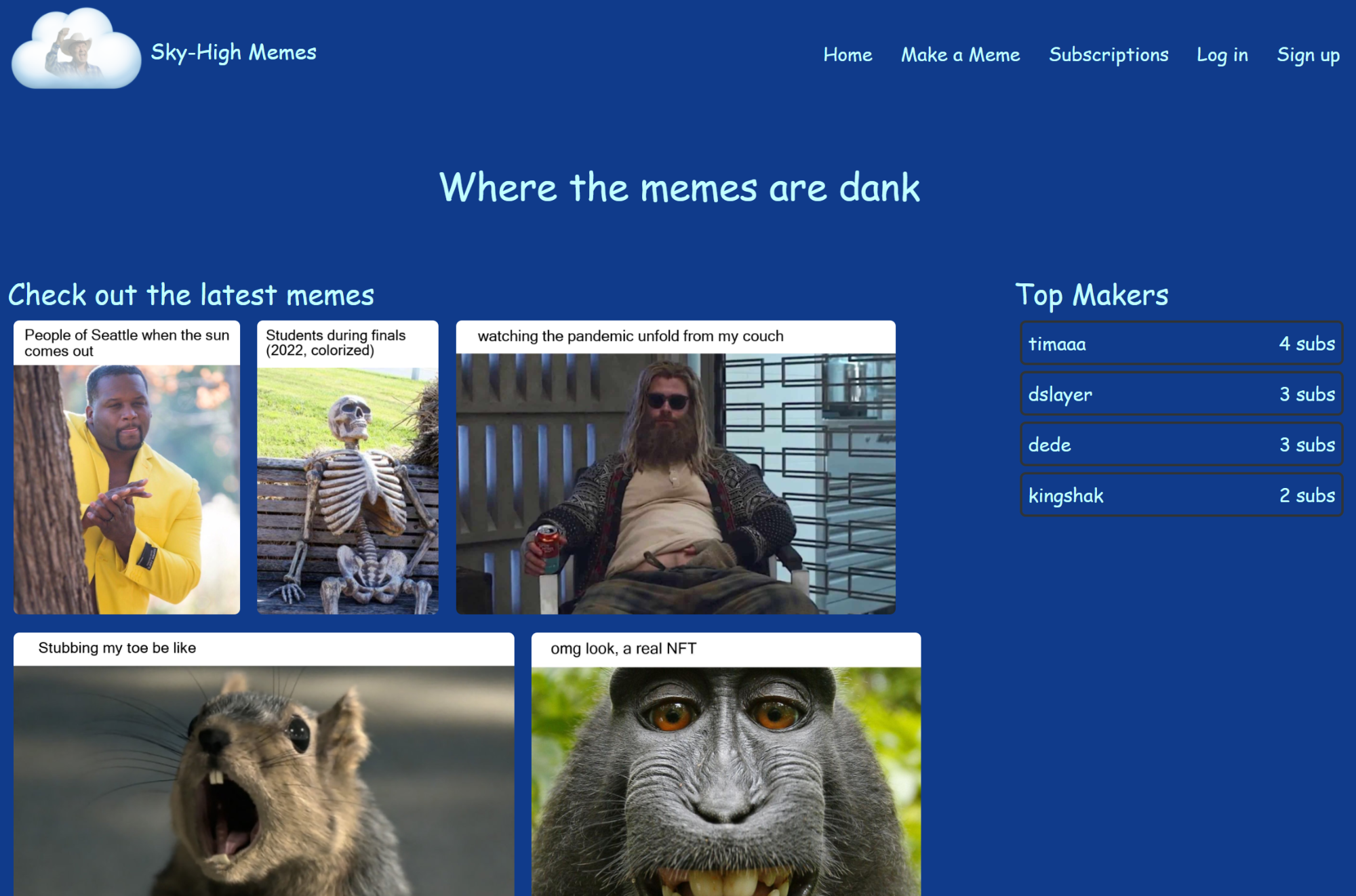

This project was done for my cloud computing class to get more hands-on experience with cloud services. My team and I decided to build a website, Sky-High Memes, where you can create memes, build a portfolio for others to see, and subscribe to other meme creators. We chose it because we wanted something fun and interesting that was still doable within the roughly two-week timeframe we had (alongside our other classes).

We decided to use Amazon Web Services (AWS) to host our application on Elastic Beanstalk (EB). We also used several other AWS resources: an S3 bucket for storing memes (images), a CloudFront distribution in front of the S3 bucket (for higher availability and faster access to memes), DynamoDB NoSQL tables for storing user and meme information, Amazon Simple Notification Service (SNS) for emailing subscribers when a new meme is created, and an AWS CodePipeline pipeline for continuous deployment (CD).
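As a rough illustration of how the S3 bucket and CloudFront distribution fit together, here is a minimal sketch, not the project's actual code: a meme image is uploaded to the bucket with the AWS SDK for Python (boto3) and then linked through the distribution's domain rather than the bucket itself. The bucket name, CloudFront domain, and `memes/<uuid>.png` key layout are hypothetical, and the S3 client is passed in so the function can be exercised without AWS credentials.

```python
import uuid
from urllib.parse import quote

# Hypothetical names; the post does not give the real bucket or distribution.
MEME_BUCKET = "sky-high-memes-example"
CLOUDFRONT_DOMAIN = "d1234example.cloudfront.net"


def cloudfront_url(key: str, domain: str = CLOUDFRONT_DOMAIN) -> str:
    """Build the public URL for an S3 object served through CloudFront."""
    # Percent-encode the key but keep "/" so the path structure survives.
    return f"https://{domain}/{quote(key, safe='/')}"


def upload_meme(s3_client, png_bytes: bytes) -> str:
    """Store a PNG meme in S3 and return the CloudFront URL to show users.

    `s3_client` is expected to be a boto3 S3 client, i.e. boto3.client("s3").
    """
    key = f"memes/{uuid.uuid4()}.png"  # hypothetical key layout
    s3_client.put_object(
        Bucket=MEME_BUCKET,
        Key=key,
        Body=png_bytes,
        ContentType="image/png",  # lets browsers render the image inline
    )
    return cloudfront_url(key)
```

In the app this would be called as `upload_meme(boto3.client("s3"), image_bytes)`. Handing out the CloudFront URL means repeat requests can be served from CloudFront's edge caches instead of hitting the bucket every time.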

The application itself was written in Python, using the Flask framework on the backend and HTML/CSS with some JavaScript on the frontend.

My Contributions

My main contributions were setting up the production environment in AWS, implementing the user sign-up and sign-in functionality, and implementing almost everything in the backend. The one exception was the meme-generation code itself, which I integrated into the backend rather than wrote.

Setting Up Production Environment in AWS

As mentioned earlier, we decided to go with AWS and use several of its resources to build our application. Creating the resources and accessing them programmatically through the Python API was straightforward. Since this was a group project, the real challenge was figuring out how to share access to these resources with my team members.

For our development workflow, we had a GitHub repository hosting our source code. When someone wanted to work on a new feature or fix an existing one, they would create a new branch, do their work, and test their changes on their own machine. Finally, they would push their work and merge their branch into the master branch, which triggered the CD pipeline and updated the EB environment.

The tricky part was local building and testing, since each team member was using their own AWS credentials. I needed to share our AWS resources (the S3 bucket, DynamoDB tables, etc.) with them so they could run and test the application on their own machines using those credentials.

This involved creating various roles and policies, both on my AWS account and on theirs, using AWS Identity and Access Management (IAM). Some services (e.g., S3) were easier to work out than others (e.g., DynamoDB). Unlike S3, the DynamoDB tables required additional code before my team members could access them programmatically. So I wrote code for retrieving all the resources that worked regardless of whether it was running in the production environment, on my machine, or on any of my team members' machines. This let my team members focus on building the site rather than worrying about credentials and access.
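The post doesn't include that code, so the following is only a sketch of one common way to get the same effect, under assumptions of our own: the resources live in the owner's account, that account has an IAM role (the ARN below is made up) whose trust policy lets the teammates' accounts assume it, and each teammate's IAM user is allowed `sts:AssumeRole` on it. The helper asks STS `get_caller_identity` which account the active credentials belong to. In production (an EB instance profile in the owner's account) or on the owner's machine, it uses the default credentials; anywhere else it calls `assume_role` and builds a session from the temporary credentials. S3 can grant cross-account access through a bucket policy alone, whereas DynamoDB did not support resource-based policies until 2024, which may be why the tables needed extra code; a role like this covers both.

```python
from typing import Callable

# Hypothetical identifiers; substitute the real account ID, role ARN and region.
OWNER_ACCOUNT_ID = "111111111111"
SHARED_ROLE_ARN = f"arn:aws:iam::{OWNER_ACCOUNT_ID}:role/SkyHighMemesDev"
REGION = "us-east-1"


def resolve_session(sts_client, session_factory: Callable[..., object]):
    """Return a session that can reach the owner's resources.

    `sts_client` is a boto3 STS client built from whatever credentials are
    active (instance profile, owner's profile, or a teammate's profile).
    `session_factory` is normally boto3.Session.
    """
    caller_account = sts_client.get_caller_identity()["Account"]
    if caller_account == OWNER_ACCOUNT_ID:
        # Production or the owner's machine: the default credentials already work.
        return session_factory(region_name=REGION)

    # A teammate's machine: switch into the shared role in the owner's account.
    creds = sts_client.assume_role(
        RoleArn=SHARED_ROLE_ARN,
        RoleSessionName=f"dev-{caller_account}",
    )["Credentials"]
    return session_factory(
        aws_access_key_id=creds["AccessKeyId"],
        aws_secret_access_key=creds["SecretAccessKey"],
        aws_session_token=creds["SessionToken"],
        region_name=REGION,
    )


def get_resources(sts_client=None, session_factory=None):
    """Return the DynamoDB tables the app needs, wherever it is running."""
    if sts_client is None:
        import boto3  # imported lazily so the helpers can be tested without it
        sts_client = boto3.client("sts")
    if session_factory is None:
        import boto3
        session_factory = boto3.Session
    dynamodb = resolve_session(sts_client, session_factory).resource("dynamodb")
    return {
        "users": dynamodb.Table("Users"),  # hypothetical table names
        "memes": dynamodb.Table("Memes"),
    }
```

Because every code path goes through `get_resources()`, the rest of the application never needs to know whose credentials it is running under.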

User Sign-up and Sign-in Functionality

When you create a meme on the site, you have the option to save the meme to your own account. Other users can view your portfolio of memes and subscribe to be notified when you drop the latest meme. All of this required our application to be able to handle session management of a user.

To do this, I decided to go with Flask-Login, a Flask extension that takes care of session management. AWS has its own service, Cognito, which comes with several extremely useful features. However, unlike Flask-Login's, its documentation was sparse, and given our timeframe, Flask-Login seemed like the safest route.
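Flask-Login needs two things from the application: a user class exposing `is_authenticated`, `is_active`, `is_anonymous` and `get_id()` (normally inherited from `flask_login.UserMixin`), and a `user_loader` callback that turns the ID stored in the session back into a user object. The sketch below writes both out by hand so it runs without Flask installed. Keying users on `email` and passing in `users_table` (a boto3 DynamoDB `Table`) are assumptions, not details from the post.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class User:
    """User object in the shape Flask-Login expects.

    In the real app this would subclass flask_login.UserMixin, which supplies
    these same four members; they are spelled out so the sketch runs alone.
    """
    email: str  # assumed to be the users table's partition key
    username: str

    @property
    def is_authenticated(self) -> bool:
        return True

    @property
    def is_active(self) -> bool:
        return True

    @property
    def is_anonymous(self) -> bool:
        return False

    def get_id(self) -> str:
        # Flask-Login stores this string in the Flask session.
        return self.email


def load_user(user_id: str, users_table) -> Optional[User]:
    """Rebuild a User from the ID in the session, or None if it no longer exists."""
    item = users_table.get_item(Key={"email": user_id}).get("Item")
    if item is None:
        return None
    return User(email=item["email"], username=item["username"])


# Wiring inside the Flask app (not executed here):
#   login_manager = flask_login.LoginManager(app)
#   @login_manager.user_loader
#   def _load(user_id):
#       return load_user(user_id, users_table)
# Views then call flask_login.login_user(user) on sign-in and
# flask_login.logout_user() on sign-out, and protect pages with
# @flask_login.login_required.
```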

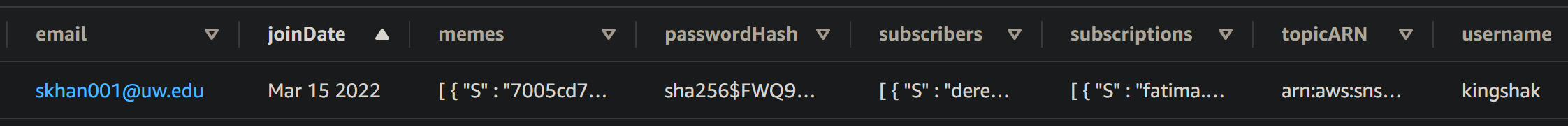

In addition to Flask-Login, I used a DynamoDB table to store each user's information (email, username, hashed password, etc.). I chose DynamoDB because it's a NoSQL database, and NoSQL databases don't enforce a fixed schema (in DynamoDB's case, beyond the primary key). That flexibility was especially useful because I was constantly revising the database schema while building up the site.
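Here is a minimal sketch of sign-up and sign-in checks against such a users table. The post doesn't say which hashing scheme was used, so this version assumes salted PBKDF2 from Python's standard library (Werkzeug's `generate_password_hash`/`check_password_hash`, which ship with Flask, would be a natural alternative). The table is assumed to be keyed on `email`. `ConditionExpression="attribute_not_exists(email)"` makes DynamoDB reject a second sign-up with the same address by raising a `ClientError` whose code is `ConditionalCheckFailedException`. Non-key attributes such as `username` are never declared up front, which is the schema flexibility described above.

```python
import hashlib
import hmac
import os
from typing import Optional

ITERATIONS = 200_000  # assumed work factor; tune for your hardware


def hash_password(password: str, salt: Optional[bytes] = None) -> str:
    """Return 'salt$digest' (both hex) using PBKDF2-HMAC-SHA256."""
    salt = salt if salt is not None else os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return f"{salt.hex()}${digest.hex()}"


def verify_password(password: str, stored: str) -> bool:
    """Re-derive the hash with the stored salt and compare."""
    salt_hex, digest_hex = stored.split("$")
    digest = hashlib.pbkdf2_hmac(
        "sha256", password.encode(), bytes.fromhex(salt_hex), ITERATIONS
    )
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(digest.hex(), digest_hex)


def sign_up(users_table, email: str, username: str, password: str) -> None:
    """Insert a new user; DynamoDB rejects the write if the email exists."""
    users_table.put_item(
        Item={
            "email": email,  # partition key (assumed)
            "username": username,  # non-key attributes need no schema change
            "password_hash": hash_password(password),
        },
        ConditionExpression="attribute_not_exists(email)",
    )


def check_credentials(users_table, email: str, password: str) -> bool:
    """True only if the user exists and the password matches."""
    item = users_table.get_item(Key={"email": email}).get("Item")
    return item is not None and verify_password(password, item["password_hash"])
```

On a successful `check_credentials`, the sign-in view would load the user and hand it to Flask-Login's `login_user`.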

The Backend

Lastly, I worked on several other aspects of the backend. I designed the URL structure for the entire site and implemented nearly all the endpoints, including the functionality to view and create memes, view another user's portfolio, and view your own subscriptions and memes. Much of this was simply a matter of querying the DynamoDB tables and retrieving the appropriate data from S3, which was then used to dynamically render an HTML template into the page returned to the user.
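To make "querying the DynamoDB tables" a little more concrete, here is a hedged sketch of the data-access side of two endpoints, kept free of Flask so it runs on its own. It assumes a memes table with partition key `creator` and sort key `created_at` (UTC ISO-8601 strings, which sort chronologically), and one SNS topic per creator whose email subscriptions are that creator's subscribers. The post says SNS emails subscribers but not how topics are organised, so that layout is an assumption. In the app, a Flask view would call these functions and pass the result to `render_template`.

```python
from datetime import datetime, timezone


def portfolio(memes_table, creator: str) -> list:
    """Return all of a creator's memes, newest first, for a portfolio page."""
    kwargs = {
        # "#creator" sidesteps any clash with DynamoDB reserved words.
        "KeyConditionExpression": "#creator = :c",
        "ExpressionAttributeNames": {"#creator": "creator"},
        "ExpressionAttributeValues": {":c": creator},
        "ScanIndexForward": False,  # descending sort key, i.e. newest first
    }
    items = []
    while True:
        response = memes_table.query(**kwargs)
        items.extend(response["Items"])
        # A single query returns at most 1 MB of data; follow the pagination key.
        if "LastEvaluatedKey" not in response:
            return items
        kwargs["ExclusiveStartKey"] = response["LastEvaluatedKey"]


def publish_meme(memes_table, sns_client, topic_arn: str,
                 creator: str, image_url: str, caption: str) -> dict:
    """Save a new meme, then email the creator's subscribers through SNS."""
    item = {
        "creator": creator,
        "created_at": datetime.now(timezone.utc).isoformat(),
        "image_url": image_url,
        "caption": caption,
    }
    memes_table.put_item(Item=item)
    sns_client.publish(
        TopicArn=topic_arn,
        Subject=f"New meme from {creator}",
        Message=f"{creator} just posted a new meme: {image_url}",
    )
    return item


def subscribe(sns_client, topic_arn: str, email: str) -> None:
    """Subscribe an email address to a creator's (hypothetical) topic."""
    # SNS first emails a confirmation link; delivery starts once it is clicked.
    sns_client.subscribe(TopicArn=topic_arn, Protocol="email", Endpoint=email)
```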

What I Learned and Next Steps

One of the main challenges was sharing the AWS resources with the rest of the team, mainly because of sparse documentation. AWS did have some guides on this, but the scenario they covered was slightly different from mine, which required more digging and experimentation. In the end I got a clean solution working, and it was a great opportunity to learn about IAM.

I became a lot more familiar and comfortable with cloud services (AWS in particular). The main reason I hadn't used them before was that the pricing always confused me and I didn't want to accidentally end up with a massive bill, so I'm glad I got to learn more about the pricing and how to interpret it during this course.

I also learned more about building web applications, particularly with Flask and Python. User session management was new to me and interesting to learn, since most websites need it to function.

I also learned a lot more about continuous deployment (using CodePipeline) and the actual development workflow for building cloud services. However, I'd like to learn more about development workflows for groups working on a cloud-based service. The one my group followed (creating all our AWS resources in one account and sharing them) was really just the first thing that came to mind, which makes me wonder whether there are more efficient alternatives.

Lastly, I'd like to learn more about containers and how to deploy my own to an AWS environment. I learned about them in class but never got to try them out myself, so this is something I'd like to explore. They seem a lot more convenient than configuring a separate production environment, since you can just run the container instead.